Are you grappling with the complexity of managing data processing tasks across many Internet of Things (IoT) devices spread over large geographic areas? Remote IoT batch jobs offer a different model: they streamline data ingestion, processing, and analysis, which can significantly improve efficiency and scalability in IoT deployments.

The evolution of the Internet of Things has led to an explosion of connected devices that generate massive amounts of data in real time, often referred to as 'big data.' Analyzing this data is crucial for informed decision-making, predictive maintenance, and operational optimization. However, the traditional approach of processing all data centrally, either on-premises or in a cloud environment, presents significant challenges. Sending every raw reading to a central location is bandwidth-intensive and introduces latency, particularly when devices are geographically dispersed. Moreover, the sheer volume of data can overwhelm centralized processing resources, leading to performance bottlenecks and increased costs. Remote IoT batch jobs address these problems by distributing processing so that data is handled closer to its source, which minimizes latency and makes better use of available resources.

One pivotal aspect of remote IoT batch jobs involves the concept of "edge computing." Edge computing brings computational capabilities closer to the data source, effectively minimizing the distance data needs to travel for processing. This decentralization is particularly beneficial in scenarios where network connectivity is intermittent or unreliable, or where real-time processing is essential. By running batch jobs on edge devices, organizations can ensure that critical data is processed promptly, even in the absence of a constant internet connection.
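To make the offline scenario concrete, here is a minimal Python sketch of store-and-forward batch processing on an edge device. It assumes a hypothetical `EdgeBatchRunner` class that summarizes readings in fixed-size batches and holds the summaries in an outbox until a connectivity check reports that the uplink is available; `is_connected` and `upload` are placeholders for whatever probe and client a real device would use.

```python
from collections import deque
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Deque, List


@dataclass
class EdgeBatchRunner:
    """Hypothetical edge-side runner: summarizes readings in fixed-size batches
    and queues the summaries until the uplink is available."""
    batch_size: int
    is_connected: Callable[[], bool]    # assumed connectivity probe
    upload: Callable[[dict], None]      # assumed uplink client
    _pending: List[float] = field(default_factory=list, init=False)
    _outbox: Deque[dict] = field(default_factory=deque, init=False)

    def ingest(self, reading: float) -> None:
        self._pending.append(reading)
        if len(self._pending) >= self.batch_size:
            self._run_batch()

    def _run_batch(self) -> None:
        batch, self._pending = self._pending, []
        # The batch is processed locally, so this works even while offline.
        summary = {"count": len(batch), "mean": mean(batch), "max": max(batch)}
        self._outbox.append(summary)
        self.flush()

    def flush(self) -> None:
        # Send queued summaries while the link is up; anything left waits.
        while self._outbox and self.is_connected():
            self.upload(self._outbox.popleft())


# Usage: simulate an outage, then recovery.
link = {"up": False}
sent: List[dict] = []
runner = EdgeBatchRunner(batch_size=3, is_connected=lambda: link["up"], upload=sent.append)
for value in [20.0, 22.0, 24.0, 30.0, 31.0, 32.0]:
    runner.ingest(value)   # two batches are processed and queued while offline
link["up"] = True
runner.flush()             # both summaries are uploaded now
```

Because only summaries are queued, the outbox stays small even during long outages; a production runner would also need to persist it across reboots and cap its size.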

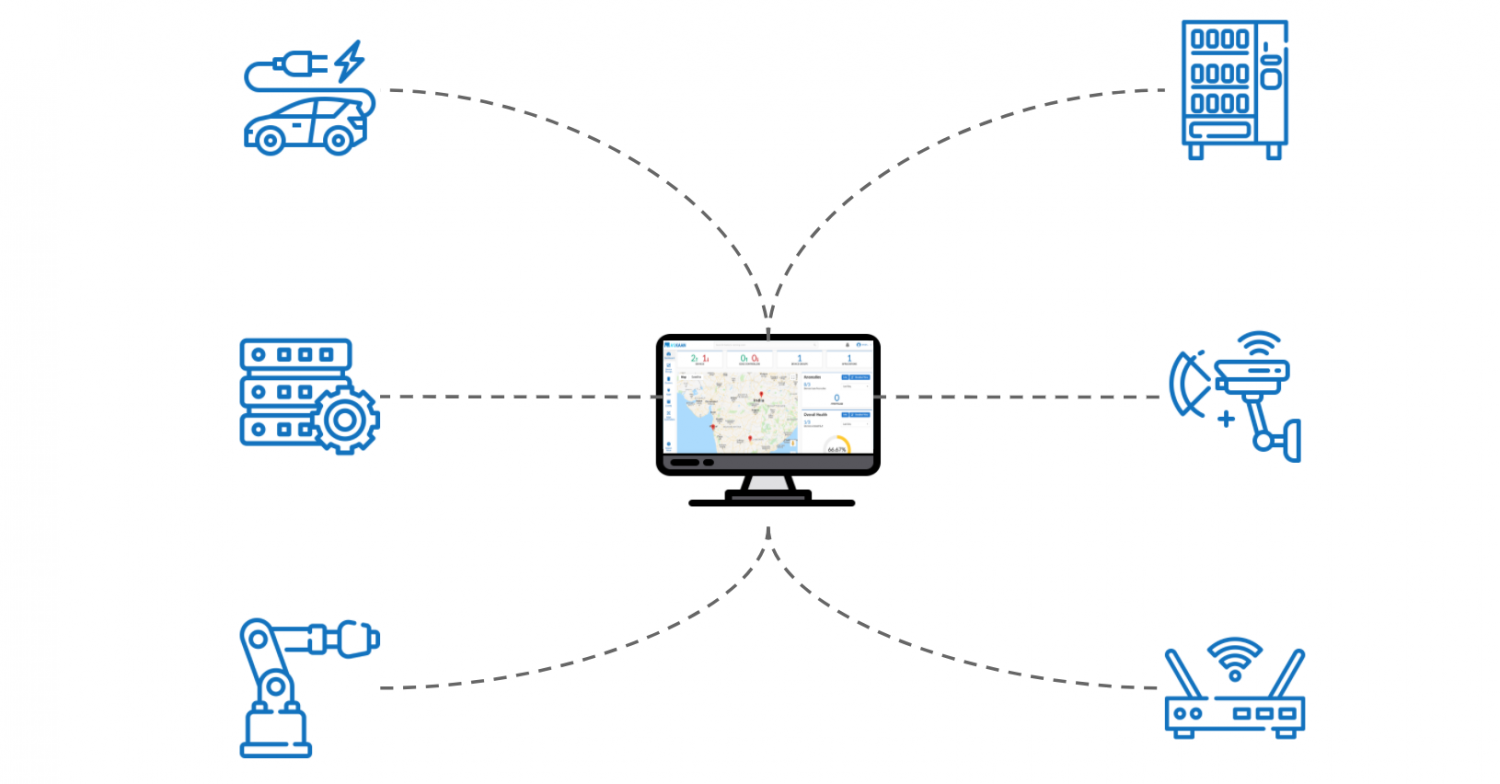

The architecture of remote IoT batch jobs often involves several key components. First, there are the IoT devices themselves, which generate data and act as the initial data source. Next comes the edge infrastructure, comprising devices with processing capabilities located near the IoT devices. These could range from microcontrollers and gateways to specialized edge servers. Then, there's the batch processing engine, which is responsible for scheduling and executing the batch jobs. Finally, a centralized management system provides a unified interface for monitoring, managing, and orchestrating the entire process. This architecture allows for flexible deployment and management of batch jobs across a diverse range of IoT devices and environments.
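The component roles described above can be modeled with a few Python classes. This is an illustrative sketch under simplifying assumptions, not a reference design: `JobSpec`, `EdgeNode`, and `ManagementSystem` are hypothetical names, IoT devices are reduced to calls to `EdgeNode.receive`, and the batch processing engine is simply `EdgeNode.execute`.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class JobSpec:
    name: str
    run: Callable[[List[float]], float]  # the batch computation applied per device


@dataclass
class EdgeNode:
    """Edge infrastructure: buffers readings from nearby devices and runs jobs locally."""
    node_id: str
    buffers: Dict[str, List[float]] = field(default_factory=dict)  # device_id -> readings

    def receive(self, device_id: str, value: float) -> None:
        # Stands in for an IoT device pushing a reading to its gateway.
        self.buffers.setdefault(device_id, []).append(value)

    def execute(self, job: JobSpec) -> Dict[str, float]:
        # Minimal "batch engine": run the job over each device's buffer, then clear it.
        results = {dev: job.run(vals) for dev, vals in self.buffers.items() if vals}
        self.buffers.clear()
        return results


@dataclass
class ManagementSystem:
    """Central control plane: dispatches a job to every node and records its status."""
    nodes: List[EdgeNode]
    status: Dict[str, str] = field(default_factory=dict)

    def dispatch(self, job: JobSpec) -> Dict[str, Dict[str, float]]:
        results: Dict[str, Dict[str, float]] = {}
        for node in self.nodes:
            try:
                results[node.node_id] = node.execute(job)
                self.status[node.node_id] = "ok"
            except Exception as exc:  # one failing node should not stop the others
                self.status[node.node_id] = f"failed: {exc}"
        return results
```

For example, dispatching `JobSpec("peak", max)` returns the highest buffered reading per device, grouped by edge node, while `status` gives the management view of which nodes succeeded.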

Data ingestion is a crucial step in the remote IoT batch job process. The data generated by the IoT devices needs to be collected, formatted, and prepared for processing. This can involve various techniques, such as data filtering, aggregation, and transformation. The specific methods used will depend on the nature of the data and the requirements of the batch jobs. Security is of paramount importance during data ingestion. Encrypting data in transit and at rest, implementing access controls, and using secure communication protocols are essential to protect sensitive information from unauthorized access and tampering.
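A minimal sketch of the filtering, transformation, and aggregation steps follows. It assumes a hypothetical raw record shape of `{"device_id": ..., "temp_f": ...}` and an illustrative plausibility range; encryption and access control would surround this step (for example, TLS on the link the records arrive over) and are not shown.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Assumed plausibility range for the sensor, in degrees Fahrenheit.
MIN_F, MAX_F = -60.0, 160.0


def ingest_batch(records: Iterable[dict]) -> Dict[str, dict]:
    """records: dicts like {"device_id": "a", "temp_f": 71.3}; temp_f may be None."""
    grouped: Dict[str, List[float]] = defaultdict(list)
    for rec in records:
        temp_f = rec.get("temp_f")
        # Filtering: drop missing readings and physically implausible values.
        if temp_f is None or not (MIN_F <= temp_f <= MAX_F):
            continue
        # Transformation: normalize Fahrenheit to Celsius.
        grouped[rec["device_id"]].append((temp_f - 32.0) * 5.0 / 9.0)
    # Aggregation: one compact summary per device instead of every raw reading.
    return {
        dev: {"n": len(vals), "min_c": round(min(vals), 2), "max_c": round(max(vals), 2)}
        for dev, vals in grouped.items()
    }
```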

The scheduling and orchestration of remote IoT batch jobs are critical for ensuring efficient and reliable operation. The batch processing engine is responsible for scheduling the jobs based on predefined criteria, such as time windows, data availability, or event triggers. The orchestration component manages the execution of the jobs, monitors their progress, and handles any errors or failures that may occur. Robust scheduling and orchestration mechanisms are essential for ensuring that the jobs run on time and that the data is processed accurately. This often involves the use of workflow management systems that enable the design, execution, and monitoring of complex data pipelines.
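The three trigger types and a dependency-ordered pipeline can be sketched with Python's standard library. `Trigger`, `should_run`, and `run_pipeline` are hypothetical names; the pipeline uses `graphlib.TopologicalSorter` (Python 3.9+) to order tasks, which captures the core of what a workflow manager does, minus persistence, retries, and monitoring.

```python
from dataclasses import dataclass
from datetime import datetime, time
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set


@dataclass
class Trigger:
    window_start: time   # daily time window (this sketch assumes it does not cross midnight)
    window_end: time
    min_records: int     # data-availability threshold


def should_run(trigger: Trigger, now: datetime, records_ready: int, event_fired: bool) -> bool:
    """An event trigger always runs the job; otherwise require the window AND enough data."""
    in_window = trigger.window_start <= now.time() <= trigger.window_end
    return event_fired or (in_window and records_ready >= trigger.min_records)


def run_pipeline(tasks: Dict[str, Callable[[], None]], deps: Dict[str, Set[str]]) -> List[str]:
    """Run each task after all of its prerequisites; deps maps task -> prerequisites."""
    order = list(TopologicalSorter(deps).static_order())  # raises CycleError on cycles
    for name in order:
        tasks[name]()
    return order
```

With `deps = {"clean": {"ingest"}, "aggregate": {"clean"}, "report": {"aggregate"}}`, the tasks run in the order ingest, clean, aggregate, report.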

Batch processing is the core of remote IoT batch jobs. It involves executing a series of operations on a set of data. These operations can include data cleaning, aggregation, analysis, and transformation. The choice of processing techniques will depend on the specific goals of the batch job. For example, statistical analysis techniques can be used to identify trends and patterns in the data, machine learning algorithms can be used to make predictions, and data visualization tools can be used to present the results in a clear and understandable format. The scalability of the batch processing engine is crucial. As the volume of data grows, the processing engine must be able to scale to handle the increased workload without compromising performance.

Error handling and fault tolerance are integral aspects of remote IoT batch jobs. In a distributed environment, failures can occur at any level, from the IoT devices to the processing engine. The system must be designed to gracefully handle these failures without disrupting the overall operation. This involves implementing mechanisms such as retries, error logging, and automatic failover. Furthermore, comprehensive monitoring and alerting are crucial for detecting and resolving issues promptly. The monitoring system should track the status of the jobs, the health of the devices, and the performance of the processing engine. Alerts should be triggered when critical events occur, enabling operators to quickly identify and address problems.
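Retries, error logging, and failover can be combined in a small wrapper. This sketch assumes a job can be sent to any of several interchangeable targets (for example, a primary and a backup gateway); `run_with_failover` is a hypothetical helper that retries each target with exponential backoff before moving to the next. The `sleep` parameter exists so that tests can skip real delays.

```python
import logging
import time
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")
log = logging.getLogger("batch")


def run_with_failover(
    job: Callable[[str], T],
    targets: Sequence[str],
    retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try targets in order; retry each with exponential backoff before failing over."""
    last_exc: Optional[Exception] = None
    for target in targets:
        for attempt in range(retries):
            try:
                return job(target)
            except Exception as exc:  # real code should catch only transient errors
                last_exc = exc
                log.warning("job failed on %s (attempt %d/%d): %s", target, attempt + 1, retries, exc)
                if attempt < retries - 1:
                    sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
        log.error("giving up on %s; failing over", target)
    raise RuntimeError("job failed on all targets") from last_exc
```

The warning and error log lines are what a monitoring system would scrape to raise alerts; the final `RuntimeError` signals that every target has been exhausted.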

Consider the application of remote IoT batch jobs in the realm of smart agriculture. Sensors deployed across vast farmlands collect data on soil moisture, temperature, and crop health. This data can be processed locally on edge devices, enabling farmers to make informed decisions about irrigation, fertilization, and pest control. The batch jobs might involve analyzing historical data to predict future crop yields, or monitoring real-time data to detect and respond to anomalies. The ability to process data locally, close to the source, is vital in this context. It minimizes latency, ensures that critical decisions can be made even in the absence of a reliable internet connection, and reduces the overall cost of data transmission.
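The sketch below shows a simplified zone-level irrigation decision that could run as an edge batch job. The thresholds and the zone and record layout are hypothetical placeholders, not agronomic guidance; the point is the pattern of aggregating a window of readings per zone and turning the result into an action.

```python
from statistics import median
from typing import Dict, List

# Hypothetical thresholds for illustration only; real values depend on soil and crop.
MOISTURE_LOW_PCT = 25.0
TEMP_HIGH_C = 32.0


def irrigation_plan(readings: Dict[str, List[dict]]) -> Dict[str, str]:
    """readings: zone -> [{"moisture_pct": float, "temp_c": float}, ...] for one batch window."""
    plan: Dict[str, str] = {}
    for zone, rows in readings.items():
        if not rows:
            plan[zone] = "no-data"
            continue
        # The median tolerates a single faulty probe better than the mean does.
        moisture = median(r["moisture_pct"] for r in rows)
        temp = median(r["temp_c"] for r in rows)
        if moisture < MOISTURE_LOW_PCT:
            # Hot, dry zones get priority because evaporation losses are higher.
            plan[zone] = "irrigate-now" if temp >= TEMP_HIGH_C else "irrigate"
        else:
            plan[zone] = "skip"
    return plan
```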

Another compelling use case is in the context of predictive maintenance for industrial equipment. Sensors attached to machinery collect data on vibration, temperature, and other critical parameters. This data is processed in batch jobs to detect potential failures before they occur. By analyzing historical and real-time data, the system can identify patterns and anomalies that indicate impending problems. This enables maintenance teams to schedule repairs proactively, minimizing downtime and reducing operational costs. Remote IoT batch jobs support this by allowing processing to occur on edge devices or on-premises servers, reducing reliance on cloud connectivity and enabling quick responses to emerging issues.
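One simple way to flag anomalies in vibration data is to compare the root-mean-square (RMS) amplitude of each new window against a historical baseline using a z-score. This sketch shows only that technique; the threshold of 3 standard deviations and the function names are assumptions, and production systems often rely on frequency-domain features or learned models instead.

```python
import math
from statistics import mean, stdev
from typing import List


def rms(window: List[float]) -> float:
    """Root-mean-square amplitude of one vibration window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def flag_anomalies(baseline_rms: List[float], new_windows: List[List[float]],
                   z_threshold: float = 3.0) -> List[int]:
    """Indices of new windows whose RMS sits more than z_threshold std devs above baseline."""
    mu, sigma = mean(baseline_rms), stdev(baseline_rms)
    flagged = []
    for i, window in enumerate(new_windows):
        value = rms(window)
        if sigma == 0:
            z = math.inf if value > mu else 0.0   # flat baseline: any increase is unusual
        else:
            z = (value - mu) / sigma
        if z > z_threshold:
            flagged.append(i)
    return flagged
```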

In the transportation sector, remote IoT batch jobs play a critical role in fleet management. Sensors in vehicles collect data on location, speed, fuel consumption, and engine performance. This data can be processed in batch jobs to optimize routes, improve fuel efficiency, and monitor driver behavior. The batch jobs might involve analyzing historical data to identify areas for improvement, or monitoring real-time data to respond to unexpected events. The ability to process data locally allows for rapid decision-making, such as re-routing vehicles to avoid traffic congestion. Furthermore, it helps to maintain a high level of data privacy and security, especially when dealing with sensitive information such as driver location.
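As an example of a fleet batch job, the sketch below estimates fuel consumption per vehicle, in litres per 100 km, from GPS pings using the haversine great-circle distance. The ping schema, including the cumulative `fuel_l` counter, is hypothetical; summing straight-line distances between pings underestimates road distance when pings are sparse.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def fuel_economy(pings: List[dict]) -> Dict[str, float]:
    """pings: {"vehicle", "ts", "lat", "lon", "fuel_l"} dicts (hypothetical schema, with
    fuel_l as cumulative litres consumed). Returns L/100 km for vehicles that moved."""
    by_vehicle: Dict[str, List[dict]] = defaultdict(list)
    for p in pings:
        by_vehicle[p["vehicle"]].append(p)
    result: Dict[str, float] = {}
    for vid, ps in by_vehicle.items():
        ps.sort(key=lambda p: p["ts"])
        km = sum(haversine_km((p["lat"], p["lon"]), (q["lat"], q["lon"]))
                 for p, q in zip(ps, ps[1:]))
        litres = ps[-1]["fuel_l"] - ps[0]["fuel_l"]
        if km > 0:
            result[vid] = round(100.0 * litres / km, 2)
    return result
```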

The energy sector benefits greatly from remote IoT batch jobs in the context of smart grids. Sensors deployed across the grid collect data on power consumption, voltage, and other parameters. This data can be processed in batch jobs to optimize energy distribution, detect and prevent outages, and integrate renewable energy sources. The batch jobs might involve analyzing historical data to predict energy demand, or monitoring real-time data to respond to fluctuations in supply and demand. The remote processing of this data allows for faster response times, enhances grid stability, and optimizes resource allocation.
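A baseline demand forecast can be as simple as averaging the load observed at each hour of the day over the past week. This sketch assumes a hypothetical `(day_index, hour, load_kw)` history format; real grid forecasting would add weather, calendar effects, and a proper model, but a naive seasonal average like this is a common reference point for judging those models.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def forecast_next_day(history: List[Tuple[int, int, float]], lookback_days: int = 7) -> Dict[int, float]:
    """history: (day_index, hour_0_to_23, load_kw) rows. Each hour of the next day is
    forecast as the mean load at that hour over the last `lookback_days` days."""
    if not history:
        raise ValueError("history is empty")
    last_day = max(day for day, _, _ in history)
    cutoff = last_day - lookback_days + 1
    by_hour: Dict[int, List[float]] = defaultdict(list)
    for day, hour, load_kw in history:
        if day >= cutoff:
            by_hour[hour].append(load_kw)
    return {hour: mean(loads) for hour, loads in sorted(by_hour.items())}
```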

The implementation of remote IoT batch jobs presents several technical challenges. One key challenge is the heterogeneous nature of IoT devices and the diverse communication protocols they employ. The system must be able to integrate with a wide variety of devices and protocols. Another challenge is the limited resources of edge devices. Edge devices typically have limited processing power, memory, and battery life. The batch jobs must be optimized to run efficiently on these resource-constrained devices. Furthermore, security is a crucial consideration. The system must be designed to protect data from unauthorized access, tampering, and denial-of-service attacks.
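One common way to cope with heterogeneous devices is to normalize every payload into a single internal schema through per-format adapters. The two vendor formats below are invented for illustration (a JSON message and a packed binary record parsed with `struct`); the registry-of-parsers pattern is the part that carries over to real deployments.

```python
import json
import struct
from typing import Callable, Dict


def parse_vendor_a_json(raw: bytes) -> dict:
    # Hypothetical format: {"id": "...", "temperature": <number in degrees C>}
    msg = json.loads(raw)
    return {"device_id": msg["id"], "temp_c": float(msg["temperature"])}


def parse_vendor_b_binary(raw: bytes) -> dict:
    # Hypothetical layout: 4-byte big-endian device number, then int16 tenths of a degree C.
    dev_num, temp_tenths = struct.unpack(">Ih", raw)
    return {"device_id": f"b-{dev_num}", "temp_c": temp_tenths / 10.0}


PARSERS: Dict[str, Callable[[bytes], dict]] = {
    "vendor-a/json": parse_vendor_a_json,
    "vendor-b/bin": parse_vendor_b_binary,
}


def normalize(content_type: str, raw: bytes) -> dict:
    """Map any supported payload to the common {"device_id", "temp_c"} schema."""
    try:
        parser = PARSERS[content_type]
    except KeyError:
        raise ValueError(f"unsupported payload type: {content_type}") from None
    return parser(raw)
```

Compact binary records like vendor B's are typical of resource-constrained devices, since they cost far fewer bytes and CPU cycles to produce than JSON.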

Several technologies and tools are essential for implementing remote IoT batch jobs. First, there's the edge computing platform, which provides the infrastructure for running batch jobs on edge devices. This platform might include edge gateways, edge servers, and specialized software. Then, there's the batch processing engine, which is responsible for scheduling and executing the batch jobs. Popular choices include Apache Spark and Apache Flink (the latter best known for stream processing, though it also supports batch workloads); both handle large volumes of data but are typically deployed on edge servers or in the cloud rather than on constrained devices. Cloud providers also offer edge runtimes, such as AWS IoT Greengrass and Azure IoT Edge, for deploying and managing workloads, including batch jobs, on edge devices; Google Cloud IoT Edge is no longer available, as Google retired its Cloud IoT services, including Cloud IoT Core, in 2023. These platforms offer features like device management, data ingestion, and data processing. Furthermore, containerization with Docker, combined with an orchestrator such as Kubernetes, simplifies the deployment and management of batch jobs across a distributed environment.

Security is paramount in remote IoT batch job implementations. Data breaches can have significant consequences, so organizations must take appropriate security measures. These include strong authentication and authorization mechanisms, encryption of data in transit and at rest, and regular software and firmware updates to address known vulnerabilities. A structured security framework, such as the NIST Cybersecurity Framework, helps organizations identify risks, protect their systems, and detect, respond to, and recover from security incidents. Security audits and penetration testing should also be conducted regularly to find and fix potential weaknesses. For data in transit, secure communication protocols such as TLS (the successor to the now-deprecated SSL) are essential.
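For message authentication and tamper detection, a device and the backend can share a per-device secret key and attach an HMAC to each payload. The sketch below uses Python's `hmac` and `hashlib` modules and assumes key provisioning happens elsewhere. HMAC provides integrity and authenticity but not confidentiality, so it complements TLS rather than replacing it, and on its own it does not prevent replay attacks, which usually call for a timestamp or nonce inside the signed payload.

```python
import hashlib
import hmac
import json


def sign(payload: dict, key: bytes) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so both sides hash identical bytes.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify(payload: dict, signature: str, key: bytes) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(sign(payload, key), signature)
```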

Scalability is another critical aspect of remote IoT batch jobs. As the volume of data generated by IoT devices grows, the system must scale to handle the increased workload. This involves supporting higher data ingestion rates, scaling the processing engine to meet increased processing demands, and ensuring that the storage infrastructure can accommodate growing data volumes. Horizontal scaling (scaling out), which adds more machines or instances rather than upgrading existing ones, is often used to address these challenges. This might involve adding more edge devices or gateways, adding worker nodes to the batch processing engine, or adding storage nodes.
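Horizontal scaling needs a rule for splitting work across nodes. A minimal approach is hash partitioning by device ID, sketched below with `hashlib` because Python's built-in `hash()` of strings is randomized per process. The helper names are hypothetical. With plain modulo hashing, changing the worker count reassigns most devices; consistent hashing or rendezvous hashing reduces that movement when workers are added.

```python
import hashlib
from typing import Dict, Iterable, List


def worker_for(device_id: str, num_workers: int) -> int:
    # Stable across processes and machines, unlike the built-in hash() for str.
    digest = hashlib.sha256(device_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_workers


def partition(device_ids: Iterable[str], num_workers: int) -> Dict[int, List[str]]:
    """Assign each device to exactly one worker so its data is always processed in one place."""
    shards: Dict[int, List[str]] = {w: [] for w in range(num_workers)}
    for dev in device_ids:
        shards[worker_for(dev, num_workers)].append(dev)
    return shards
```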

The economic benefits of remote IoT batch jobs can be substantial. By processing data in a distributed way, organizations can reduce bandwidth costs and minimize the need for expensive centralized processing infrastructure. Better resource utilization can also lead to significant cost savings. Processing data closer to the source reduces latency as well, which can improve operational efficiency and enable real-time decision-making. This can lead to increased productivity, reduced downtime, and improved customer satisfaction. In addition, by leveraging data analytics, organizations can identify new revenue streams and optimize their business processes.
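The bandwidth argument can be checked with back-of-the-envelope arithmetic. The figures below are hypothetical (1,000 sensors each sending a 100-byte reading once per second, versus one 200-byte summary per minute computed at the edge) and ignore protocol overhead.

```python
SECONDS_PER_DAY = 86_400


def daily_bytes(devices: int, msgs_per_device_per_day: int, bytes_per_msg: int) -> int:
    return devices * msgs_per_device_per_day * bytes_per_msg


# Hypothetical fleet: every raw reading is sent to the central system...
raw = daily_bytes(1_000, SECONDS_PER_DAY, 100)
# ...versus one summary per device per minute, produced by an edge batch job.
summarized = daily_bytes(1_000, SECONDS_PER_DAY // 60, 200)
reduction = 1 - summarized / raw
print(f"raw: {raw / 1e9:.2f} GB/day, summarized: {summarized / 1e9:.3f} GB/day, reduction: {reduction:.1%}")
```

Under these assumptions it prints `raw: 8.64 GB/day, summarized: 0.288 GB/day, reduction: 96.7%`; the actual saving depends on how much detail the central system still needs.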

Looking ahead, remote IoT batch jobs are likely to become even more important. As the number of connected devices continues to grow rapidly, the need for efficient and scalable data processing solutions will become more critical. Technologies such as 5G will further enhance the capabilities of remote IoT batch jobs by providing faster and more reliable communication. Artificial intelligence (AI) and machine learning (ML) will play an increasingly important role in automating data processing tasks and enabling advanced analytics. In addition, the integration of edge computing with cloud platforms will create hybrid architectures that combine the benefits of local processing with the scalability and flexibility of the cloud.

The ethical considerations surrounding remote IoT batch jobs are important. Data privacy and security are paramount, and organizations must comply with all applicable regulations, such as GDPR and CCPA. Transparency is also crucial: organizations should be open about how they collect, use, and store data. Furthermore, the potential for bias in data and algorithms must be addressed. Organizations should take steps to identify and minimize bias in their data and algorithms and to ensure that their systems do not discriminate against any group of people. Finally, the responsible use of data and technology is essential to ensure that remote IoT batch jobs benefit society as a whole.

Ultimately, the success of remote IoT batch jobs depends on careful planning, design, and execution. Organizations must clearly define their objectives, choose the right technologies and tools, and implement robust security measures. They must also be prepared to adapt to changing requirements and embrace new technologies. The ability to leverage data effectively will be a key differentiator in the IoT landscape. Organizations that can successfully implement and manage remote IoT batch jobs will be well-positioned to capitalize on the opportunities presented by the Internet of Things.

The continuing evolution of remote IoT batch jobs will further enhance the capabilities of organizations operating in the connected world. The ability to process data at the edge, coupled with advances in AI and ML, will enable even more sophisticated applications. These advances will improve efficiency and decision-making and create new value across a wide range of industries. As the IoT landscape expands, the strategic implementation of remote IoT batch jobs will become an essential element for organizations seeking to stay competitive and maximize the value derived from their connected devices.